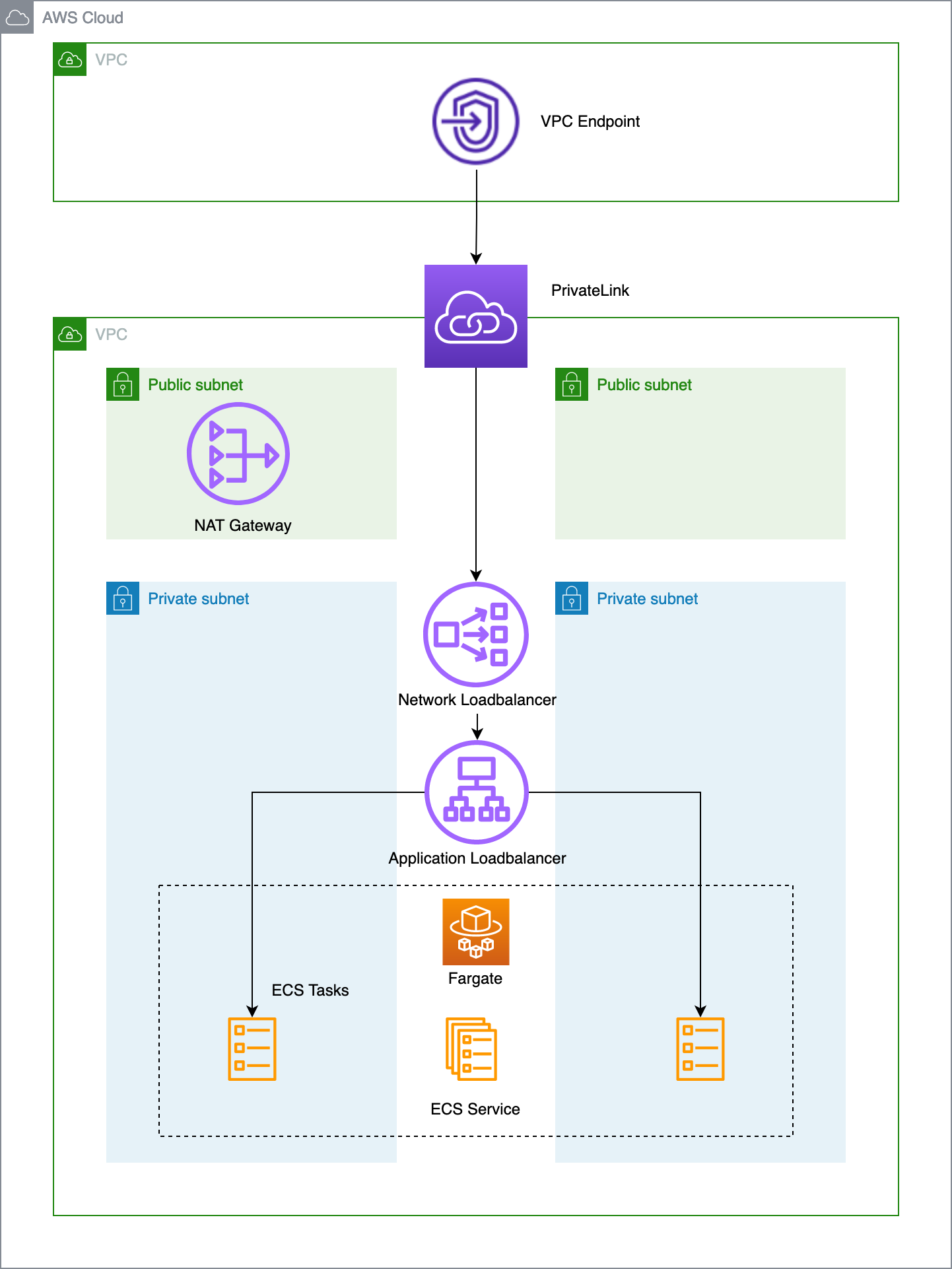

Many companies use container orchestration services (like ECS or EKS) to host their microservices. Those microservices can offer APIs that need to be accessible to external customers. If those customers also use AWS, the best solution is to keep all communication private inside AWS: the services in the customer’s VPC should be able to communicate with the containers hosted in the VPC of the API provider. In this demo, I’ll use ECS to host the API.

Architecture

I’ll use one AWS account but two VPCs: one VPC for the API provider and one VPC for the customer (the API consumer). In reality, each party would have its own account, but that does not make a big difference in this setup (see later). Another important remark: to keep things basic, I’ll serve my API over HTTP. In production, HTTPS is recommended.

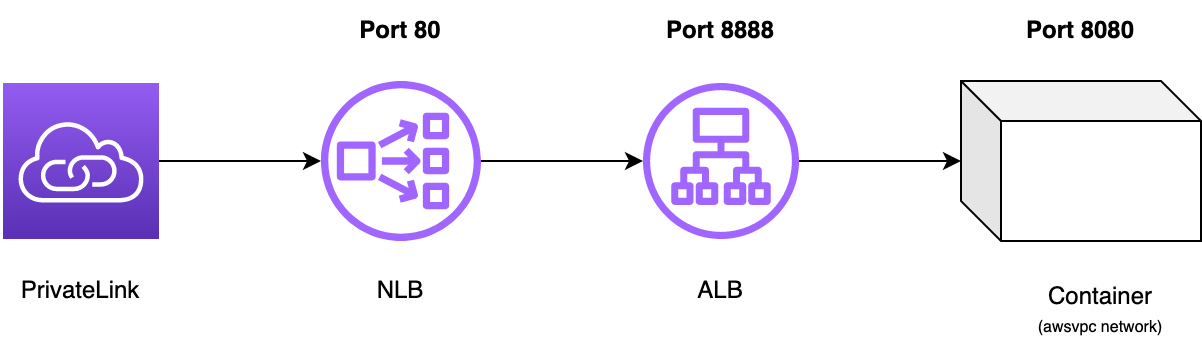

The API provider hosts its containers on ECS using Fargate (serverless), behind a private Application Load Balancer (ALB). To allow a private connection between the customer’s VPC and the API provider’s VPC, we need to set up AWS PrivateLink, which provides private connectivity between VPCs and AWS services. PrivateLink cannot be connected to an Application Load Balancer directly; it requires a Network Load Balancer (NLB). This means our architecture will look like this:

The sample API used in this demo is a task API that returns all tasks, or a single task by ID.

    $ docker run -d -p 8080:8080 lvthillo/python-flask-api
    $ curl localhost:8080/api/tasks
    [{"id": 1, "name": "task1", "description": "This is task 1"}, {"id": 2, "name": "task2", "description": "This is task 2"}, {"id": 3, "name": "task3", "description": "This is task 3"}]
    $ curl localhost:8080/api/task/2
    {"id": 2, "name": "task2", "description": "This is task 2"}

To set up the infrastructure, I’m using Terraform. The code is available on my GitHub.

We need to create two VPCs. I’ve created a basic module that creates a VPC with four subnets (one public and one private subnet in each of two AZs), deploys a NAT Gateway and an Internet Gateway, and configures the route tables accordingly.

    module "network" {
      source                 = "./modules/network"
      vpc_name               = "vpc-1"
      vpc_cidr               = var.vpc
      subnet_1a_public_cidr  = var.pub_sub_1a
      subnet_1b_public_cidr  = var.pub_sub_1b
      subnet_1a_private_cidr = var.priv_sub_1a
      subnet_1b_private_cidr = var.priv_sub_1b
    }
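The root-level variables passed into the network module are not shown in the post. A minimal sketch of how they could be declared follows; the CIDR defaults are illustrative assumptions, not the values used in the repository.

```hcl
# Hypothetical variables.tf entries for the network module inputs.
# The default CIDR ranges below are assumptions chosen for illustration.
variable "vpc" {
  description = "CIDR block of the API provider VPC"
  type        = string
  default     = "10.0.0.0/16"
}

variable "pub_sub_1a" {
  description = "CIDR block of the public subnet in the first AZ"
  type        = string
  default     = "10.0.1.0/24"
}

variable "pub_sub_1b" {
  description = "CIDR block of the public subnet in the second AZ"
  type        = string
  default     = "10.0.2.0/24"
}

variable "priv_sub_1a" {
  description = "CIDR block of the private subnet in the first AZ"
  type        = string
  default     = "10.0.11.0/24"
}

variable "priv_sub_1b" {
  description = "CIDR block of the private subnet in the second AZ"
  type        = string
  default     = "10.0.12.0/24"
}
```

Each subnet range must fall inside the VPC range; the module itself decides which AZ each subnet lands in.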

Next, we need to deploy the ECS cluster, service, and task definition in the API provider’s VPC, with the ALB in front of them.

The ecs module deploys two containers running the sample API.

    module "ecs" {
      source               = "./modules/ecs"
      ecs_subnets          = module.network.private_subnets
      ecs_container_name   = "demo"
      ecs_port             = var.ecs_port # we use networkMode awsvpc so host and container ports should match
      ecs_task_def_name    = "demo-task"
      ecs_docker_image     = "lvthillo/python-flask-api"
      vpc_id               = module.network.vpc_id
      alb_sg               = module.alb.alb_sg
      alb_target_group_arn = module.alb.alb_tg_arn
    }

    module "alb" {
      source         = "./modules/alb"
      alb_subnets    = module.network.private_subnets
      vpc_id         = module.network.vpc_id
      ecs_sg         = module.ecs.ecs_sg
      alb_port       = var.alb_port
      ecs_port       = var.ecs_port
      default_vpc_sg = module.network.default_vpc_sg
      vpc_cidr       = var.vpc # Allow inbound client traffic via AWS PrivateLink on the load balancer listener port
    }
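The post doesn’t show the inside of the ecs module. A minimal sketch of what it might contain, assuming a Fargate task with 0.25 vCPU and 512 MiB and hypothetical cluster and service names, looks like this. It uses only the module inputs listed above. With the awsvpc network mode, hostPort must equal containerPort, which is what the comment on ecs_port refers to. The ALB target group behind var.alb_target_group_arn would need target_type = "ip", because Fargate tasks are registered by IP address.

```hcl
# Hypothetical sketch of the ecs module internals; names and sizes are assumptions.
resource "aws_ecs_cluster" "demo" {
  name = "demo-cluster" # hypothetical name
}

resource "aws_ecs_task_definition" "demo" {
  family                   = var.ecs_task_def_name
  network_mode             = "awsvpc" # each task gets its own ENI
  requires_compatibilities = ["FARGATE"]
  cpu                      = "256" # 0.25 vCPU (assumed size)
  memory                   = "512" # 512 MiB (assumed size)

  container_definitions = jsonencode([
    {
      name      = var.ecs_container_name
      image     = var.ecs_docker_image
      essential = true
      portMappings = [
        {
          # With awsvpc, hostPort must equal containerPort.
          containerPort = var.ecs_port
          hostPort      = var.ecs_port
          protocol      = "tcp"
        }
      ]
    }
  ])
}

resource "aws_ecs_service" "demo" {
  name            = "demo-service" # hypothetical name
  cluster         = aws_ecs_cluster.demo.id
  task_definition = aws_ecs_task_definition.demo.arn
  desired_count   = 2 # the two sample API containers
  launch_type     = "FARGATE"

  network_configuration {
    subnets          = var.ecs_subnets
    security_groups  = [aws_security_group.ecs_sg.id]
    assign_public_ip = false # private subnets; the image is pulled via the NAT Gateway
  }

  # Registers each task's IP in the ALB target group.
  load_balancer {
    target_group_arn = var.alb_target_group_arn
    container_name   = var.ecs_container_name
    container_port   = var.ecs_port
  }
}
```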

The security group of the ECS service only allows connections from the ALB.

    resource "aws_security_group_rule" "ingress" {
      type                     = "ingress"
      description              = "Allow ALB to ECS"
      from_port                = var.ecs_port
      to_port                  = var.ecs_port
      protocol                 = "tcp"
      security_group_id        = aws_security_group.ecs_sg.id
      source_security_group_id = var.alb_sg
    }
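The rule above attaches to aws_security_group.ecs_sg, whose definition isn’t shown. A minimal sketch, assuming a hypothetical group name, could look like this. Terraform removes the default allow-all egress rule from security groups it creates, so an explicit egress rule is assumed here to let the Fargate tasks pull the image from Docker Hub through the NAT Gateway.

```hcl
# Hypothetical definition of the ECS security group referenced above.
resource "aws_security_group" "ecs_sg" {
  name        = "ecs-sg" # hypothetical name
  description = "Security group for the ECS tasks"
  vpc_id      = var.vpc_id
  # Ingress is granted by the separate "ingress" rule (ALB only).
}

# Outbound access, e.g. to pull the container image via the NAT Gateway.
resource "aws_security_group_rule" "egress" {
  type              = "egress"
  description       = "Allow all outbound traffic"
  from_port         = 0
  to_port           = 0
  protocol          = "-1" # all protocols
  cidr_blocks       = ["0.0.0.0/0"]
  security_group_id = aws_security_group.ecs_sg.id
}
```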

At the time of writing, an NLB could not have a security group (AWS added this option in August 2023), so we can’t reference the NLB in the ALB’s security group. We need to find another way to restrict access to the ALB.

The alb module uses the CIDR range of the API provider’s VPC (the vpc_cidr input), which contains the subnets where we will create our NLB, as the source in the ALB security group. This means everything inside the API provider’s VPC can communicate with the ALB.

In a production environment, it can be useful to deploy the NLB in dedicated NLB subnets and allow only those subnet CIDR ranges in the ALB security group.
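A minimal sketch of the ALB rules described above, assuming the alb module creates a security group named aws_security_group.alb_sg (a hypothetical name; the post only shows the module’s alb_sg output). The ingress rule uses the vpc_cidr input. The egress rule is an assumption: because Terraform drops the default allow-all egress rule, the ALB needs an explicit rule to reach the ECS tasks. For egress rules, source_security_group_id names the destination group.

```hcl
# Hypothetical ALB security group rules inside the alb module.
resource "aws_security_group_rule" "alb_ingress" {
  type        = "ingress"
  description = "Allow traffic from the provider VPC (incl. the NLB) to the ALB"
  from_port   = var.alb_port
  to_port     = var.alb_port
  protocol    = "tcp"
  # Whole VPC range for the demo; in production, list only the NLB subnet CIDRs.
  cidr_blocks       = [var.vpc_cidr]
  security_group_id = aws_security_group.alb_sg.id
}

resource "aws_security_group_rule" "alb_egress" {
  type                     = "egress"
  description              = "Allow ALB to ECS tasks"
  from_port                = var.ecs_port
  to_port                  = var.ecs_port
  protocol                 = "tcp"
  source_security_group_id = var.ecs_sg # destination for an egress rule
  security_group_id        = aws_security_group.alb_sg.id
}
```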

Now let’s deploy this private NLB in front of our private ALB.

    module "nlb" {
      source            = "./modules/nlb"
      nlb_subnets       = module.network.private_subnets
      vpc_id            = module.network.vpc_id
      alb_arn           = module.alb.alb_arn
      nlb_port          = var.nlb_port
      alb_listener_port = module.alb.alb_listener_port
    }

Here I’m using some new AWS magic: since September 2021, it’s possible to register an ALB as a target of an NLB.

This was not possible in the past. I once created a Terraform module that deployed a Lambda function to monitor the ALB’s IP addresses and update the NLB target group when needed. I’m very happy that it’s not needed anymore!

Now we can set the target_type of our NLB target group to alb.

    resource "aws_lb_target_group" "nlb_tg" {
      name        = var.nlb_tg_name
      port        = var.alb_listener_port
      protocol    = "TCP"
      target_type = "alb"
      vpc_id      = var.vpc_id
    }
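The target group alone doesn’t route anything yet. A minimal sketch of the rest of the nlb module, assuming a hypothetical NLB name, creates an internal NLB, a TCP listener on nlb_port, and an attachment that registers the ALB by its ARN. AWS requires the ALB to have a listener on the target group’s port, which is why the port is passed through as alb_listener_port.

```hcl
# Hypothetical sketch of the remaining nlb module resources.
resource "aws_lb" "nlb" {
  name               = "demo-nlb" # hypothetical name
  internal           = true       # private NLB, reachable only through PrivateLink/VPC
  load_balancer_type = "network"
  subnets            = var.nlb_subnets
}

resource "aws_lb_listener" "nlb_listener" {
  load_balancer_arn = aws_lb.nlb.arn
  port              = var.nlb_port
  protocol          = "TCP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.nlb_tg.arn
  }
}

# For target_type = "alb", the target ID is the ALB's ARN, so no Lambda
# is needed to track changing ALB IP addresses.
resource "aws_lb_target_group_attachment" "alb" {
  target_group_arn = aws_lb_target_group.nlb_tg.arn
  target_id        = var.alb_arn
  port             = var.alb_listener_port
}
```

The module’s nlb_arn output, used by the endpoint service below, would then simply expose aws_lb.nlb.arn.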

Next, we can deploy our AWS PrivateLink-powered service (referred to as an endpoint service). In a real production environment, you’ll have to add allowed_principals to allowlist the customer’s account ID.

    resource "aws_vpc_endpoint_service" "privatelink" {
      acceptance_required        = false
      network_load_balancer_arns = [module.nlb.nlb_arn]
      #checkov:skip=CKV_AWS_123:For this demo I don't need to configure the VPC Endpoint Service for Manual Acceptance
    }
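A production variant of the endpoint service might look like the sketch below, used in place of the demo resource. The account ID 111122223333 is a placeholder, not a real customer. With acceptance_required = true, the provider also has to accept each endpoint connection request, so the checkov skip is no longer needed.

```hcl
# Hypothetical production variant of the endpoint service.
resource "aws_vpc_endpoint_service" "privatelink" {
  acceptance_required        = true # provider must approve each connection
  network_load_balancer_arns = [module.nlb.nlb_arn]

  # Only principals in this list may create endpoints to the service.
  # 111122223333 is a placeholder for the customer's AWS account ID.
  allowed_principals = ["arn:aws:iam::111122223333:root"]
}
```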

Everything is ready from the API provider’s perspective: we have deployed the VPC, the ECS infrastructure, the ALB, the NLB, and PrivateLink.

The customer’s VPC can be deployed using the same network module. The customer needs an interface VPC endpoint to connect to PrivateLink.

The VPC endpoint creates an elastic network interface (ENI) in each selected subnet of the customer’s VPC. The endpoint’s Fully Qualified Domain Name (FQDN) resolves to the private IP addresses of those interfaces, and traffic sent to them is forwarded through PrivateLink to the provider’s endpoint service.

    resource "aws_vpc_endpoint" "vpce" {
      vpc_id             = module.network_added.vpc_id
      service_name       = aws_vpc_endpoint_service.privatelink.service_name
      vpc_endpoint_type  = "Interface"
      security_group_ids = [aws_security_group.vpce_sg.id]
      subnet_ids         = module.network_added.public_subnets
    }
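The endpoint references aws_security_group.vpce_sg, which isn’t shown. A minimal sketch follows, assuming a hypothetical var.vpc_added that holds the customer VPC’s CIDR. It opens the NLB listener port on the endpoint ENIs, since clients connect to the endpoint on the same ports the NLB listens on. Security groups are stateful, so replies need no egress rule.

```hcl
# Hypothetical definition of the VPC endpoint security group.
resource "aws_security_group" "vpce_sg" {
  name        = "vpce-sg" # hypothetical name
  description = "Allow customer workloads to reach the VPC endpoint"
  vpc_id      = module.network_added.vpc_id
}

resource "aws_security_group_rule" "vpce_ingress" {
  type              = "ingress"
  description       = "Allow HTTP from the customer VPC to the endpoint ENIs"
  from_port         = var.nlb_port
  to_port           = var.nlb_port
  protocol          = "tcp"
  cidr_blocks       = [var.vpc_added] # hypothetical: customer VPC CIDR
  security_group_id = aws_security_group.vpce_sg.id
}
```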

Finally, I’m deploying an EC2 instance in the customer VPC (public subnet) to test the integration.

For this, I’m using the terraform-aws-modules/ec2-instance Terraform module.

Update the key variable in variables.tf with the name of your existing AWS SSH key pair. Alternatively, you can update the code to attach an IAM role with the required permissions to the instance and use AWS Systems Manager Session Manager instead of SSH.

    module "ec2_instance_added" {
      source  = "terraform-aws-modules/ec2-instance/aws"
      version = "~> 3.0"

      name                        = "test-instance-vpc-2"
      associate_public_ip_address = true
      ami                         = "ami-05cd35b907b4ffe77" # eu-west-1 specific
      instance_type               = "t2.micro"
      key_name                    = var.key
      vpc_security_group_ids      = [aws_security_group.ssh_sg_added.id]
      subnet_id                   = element(module.network_added.public_subnets, 0)
    }
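The instance uses aws_security_group.ssh_sg_added, which isn’t shown. A minimal sketch, assuming a hypothetical var.my_ip containing your public IP in CIDR form (for example "203.0.113.10/32"), allows SSH only from that address. Because Terraform removes the default egress rule, an explicit egress rule is added so the instance can open connections to the VPC endpoint.

```hcl
# Hypothetical definition of the test instance security group.
resource "aws_security_group" "ssh_sg_added" {
  name        = "ssh-sg-added" # hypothetical name
  description = "SSH access to the test instance"
  vpc_id      = module.network_added.vpc_id
}

resource "aws_security_group_rule" "ssh_ingress" {
  type              = "ingress"
  description       = "Allow SSH from my IP only"
  from_port         = 22
  to_port           = 22
  protocol          = "tcp"
  cidr_blocks       = [var.my_ip] # hypothetical variable, e.g. "203.0.113.10/32"
  security_group_id = aws_security_group.ssh_sg_added.id
}

# Lets the instance reach the VPC endpoint (and the internet for updates).
resource "aws_security_group_rule" "ssh_sg_egress" {
  type              = "egress"
  description       = "Allow all outbound traffic"
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
  security_group_id = aws_security_group.ssh_sg_added.id
}
```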

Test Setup

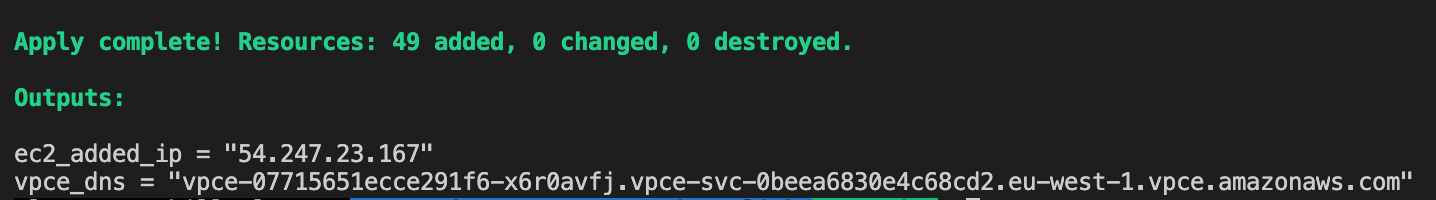

Deploy the Terraform stack to test the setup.

    $ git clone https://github.com/lvthillo/aws-ecs-privatelink.git
    $ cd aws-ecs-privatelink
    $ terraform init
    $ terraform apply
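The Terraform outputs mentioned in the next step could be declared along these lines. This is a sketch: the output names are hypothetical, it assumes the ec2-instance module’s public_ip output, and it takes the first dns_entry of the endpoint, which is usually the regional DNS name.

```hcl
# Hypothetical outputs.tf entries used to find the test values.
output "vpce_dns_name" {
  description = "DNS name of the interface VPC endpoint"
  value       = aws_vpc_endpoint.vpce.dns_entry[0].dns_name
}

output "test_instance_public_ip" {
  description = "Public IP of the test instance in the customer VPC"
  value       = module.ec2_instance_added.public_ip
}
```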

Check the Terraform outputs and SSH to the EC2 instance deployed in the customer VPC. Then try to curl the VPC endpoint.

    $ ssh -i your-key.pem ec2-user@54.247.23.167
    $ curl http://vpce-07715651ecce291f6-x6r0avfj.vpce-svc-0beea6830e4c68cd2.eu-west-1.vpce.amazonaws.com/api/tasks
    [{"id": 1, "name": "task1", "description": "This is task 1"}, {"id": 2, "name": "task2", "description": "This is task 2"}, {"id": 3, "name": "task3", "description": "This is task 3"}]
    $ curl http://vpce-07715651ecce291f6-x6r0avfj.vpce-svc-0beea6830e4c68cd2.eu-west-1.vpce.amazonaws.com/api/task/2
    {"id": 2, "name": "task2", "description": "This is task 2"}

Conclusion

That’s it! Our customer can access our API hosted in ECS using PrivateLink.

All communication remains inside AWS.

There are some important caveats to remember which are described at the bottom of this post.

Don’t forget to destroy this demo stack using terraform destroy!

I hope you enjoyed it!