Introduction

As a freelance Cloud & DevOps engineer, I work with many different companies and types of solutions. At one of my clients, I’m part of the Cloud Governance team. The company has many teams, each with its own AWS accounts. They follow the YBIYRI principle (You Build It, You Run It): a team has complete freedom to choose which IaC tool or programming language it uses to build its solutions. There are more than 200 AWS accounts, all containing different tools and applications. It quickly became clear that some form of governance was needed for security improvements, tagging compliance and cost optimization.

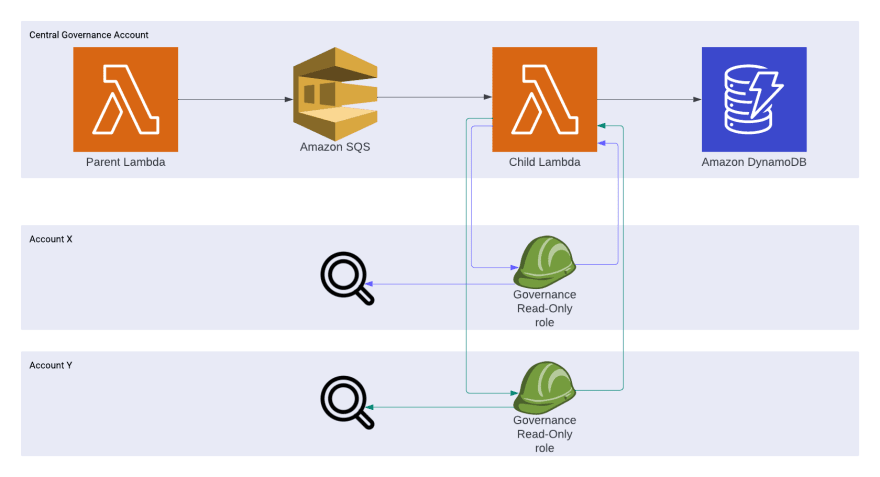

That’s why we initially developed the following solution.

We used a parent Lambda that retrieved all active AWS account IDs and put them on an SQS queue. This parent Lambda ran once every night. A child Lambda was triggered at least once for each account. The child Lambda assumed a role within each target account. We created this role in every account of our organization using organizational StackSets. The role could be assumed from our central governance account and was used to retrieve the necessary information from within the target account. Finally, the child Lambda wrote the data to DynamoDB. We then had to query this DynamoDB table and warn the teams whose accounts were not compliant with our rules.
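The fan-out step of that nightly job can be sketched in Python. This is a minimal reconstruction, not our original code. The function names, the `{"account_id": ...}` message format and the injected client objects are assumptions. In Lambda you would pass `boto3.client("organizations")` and `boto3.client("sqs")`.

```python
import json
from typing import Any, Iterator

SQS_MAX_BATCH = 10  # SendMessageBatch accepts at most 10 entries per call


def list_active_account_ids(org_client: Any) -> list[str]:
    """Return the IDs of all ACTIVE accounts in the organization."""
    account_ids = []
    for page in org_client.get_paginator("list_accounts").paginate():
        for account in page["Accounts"]:
            # Skip suspended accounts and accounts pending closure.
            if account["Status"] == "ACTIVE":
                account_ids.append(account["Id"])
    return account_ids


def batched(items: list[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def enqueue_accounts(sqs_client: Any, queue_url: str, account_ids: list[str]) -> int:
    """Send one message per account ID; return the number of batches sent."""
    batches = 0
    for batch in batched(account_ids, SQS_MAX_BATCH):
        entries = [
            # Entry IDs only need to be unique within a single batch.
            {"Id": str(index), "MessageBody": json.dumps({"account_id": account_id})}
            for index, account_id in enumerate(batch)
        ]
        response = sqs_client.send_message_batch(QueueUrl=queue_url, Entries=entries)
        # A batch call can partially fail without raising, so check explicitly.
        if response.get("Failed"):
            raise RuntimeError(f"Failed to enqueue: {response['Failed']}")
        batches += 1
    return batches
```

Each message then triggers the child Lambda through an SQS event source mapping. SQS delivers messages at least once, so the child Lambda has to tolerate processing the same account twice.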

The problems with this solution were:

- We had to warn teams manually by sending emails.

- The solution was not “real-time” because it only ran once each night.

- Teams had no visibility into which rules they were or weren’t compliant with.

- The child Lambda’s execution time was long for large accounts.

There was clearly a need for a service that offered more visibility and could run rules both on a schedule and in response to events. A combination of AWS Config and Security Hub seemed like the perfect fit. Teams were already familiar with Security Hub for general security findings, so we were very happy when AWS announced the integration between AWS Config and Security Hub. We started investigating whether we could convert our old solution to AWS organizational Config rules. In many cases, an organizational AWS managed Config rule seemed a great fit.

AWS Organizational Managed Config Rule

The following AWS managed rule is much simpler and more efficient than our old solution. It checks whether each CloudWatch log group has a retention period set.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: "Deploy Organizational AWS Managed Config Rule."
Resources:
  BasicOrganizationConfigRule:
    Type: "AWS::Config::OrganizationConfigRule"
    Properties:
      OrganizationConfigRuleName: "OrganizationConfigRuleName"
      OrganizationManagedRuleMetadata:
        RuleIdentifier: "CW_LOGGROUP_RETENTION_PERIOD_CHECK"
        Description: "Check if CW Loggroup has a retention period set."
```

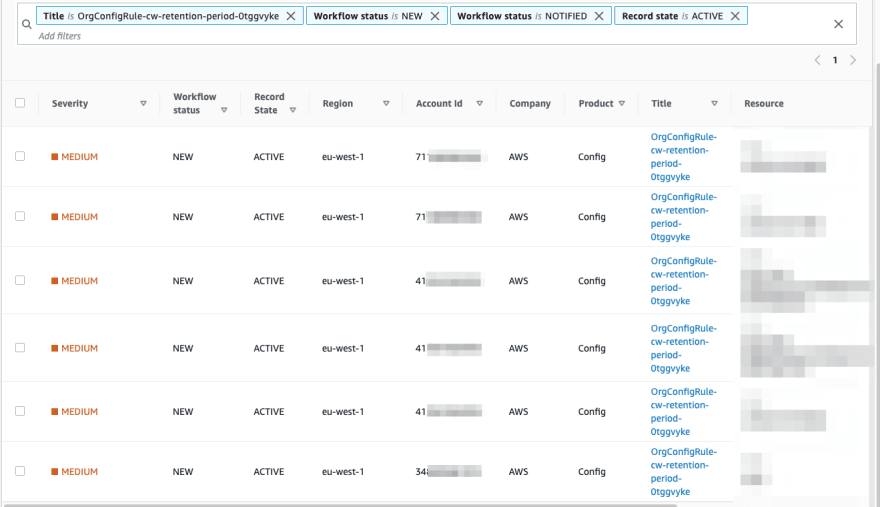

We deploy this CloudFormation stack in our aws-config-delegated-admin account, which deploys the rule to every account in our organization. In our aws-security-hub-delegated-admin account, we can see all results combined in Security Hub, which increases visibility for the teams.
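A single stack in the delegated admin account rolls the rule out to every member account, so it is worth checking whether the rollout succeeded everywhere. Below is a minimal sketch that uses the Config API `get_organization_config_rule_detailed_status`. It assumes the code runs in the aws-config-delegated-admin account with a `boto3.client("config")`. The function name `summarize_rule_deployment` is our own.

```python
from collections import Counter
from typing import Any


def summarize_rule_deployment(config_client: Any, rule_name: str) -> tuple[Counter, list[str]]:
    """Count member-account statuses and collect accounts whose deployment failed."""
    counts: Counter = Counter()
    failed_accounts: list[str] = []
    request = {"OrganizationConfigRuleName": rule_name}
    while True:
        response = config_client.get_organization_config_rule_detailed_status(**request)
        for status in response["OrganizationConfigRuleDetailedStatus"]:
            state = status["MemberAccountRuleStatus"]  # e.g. CREATE_SUCCESSFUL
            counts[state] += 1
            # CREATE_FAILED, UPDATE_FAILED and DELETE_FAILED all end in _FAILED.
            if state.endswith("_FAILED"):
                failed_accounts.append(status["AccountId"])
        # The API is paginated with NextToken.
        if not response.get("NextToken"):
            return counts, failed_accounts
        request["NextToken"] = response["NextToken"]
```

With the template above, you would call `summarize_rule_deployment(boto3.client("config"), "OrganizationConfigRuleName")` and follow up on any account IDs it returns as failed.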

A nice-to-have would be an integration between Security Hub and AWS Chatbot, which we plan to investigate in the near future. Now let’s conclude with some general remarks about AWS Config managed rules.

Advantages:

- Easy to implement and schedule.

- Many managed rules offered by AWS.

- No need to deploy a config role in each account.

Disadvantages:

- Customization is limited to the parameters that each managed rule exposes.

- Each managed rule supports only the trigger types AWS defined for it.

AWS Organizational Custom Config Rule backed by Lambda

For more advanced customizations, we also use AWS custom Config rules backed by Lambda.

```yaml
CustomConfigRuleApplicationTag:
  Type: "AWS::Config::OrganizationConfigRule"
  Properties:
    OrganizationConfigRuleName: "custom-config-rule"
    OrganizationCustomRuleMetadata:
      Description: "Validate whether xxx."
      LambdaFunctionArn: !GetAtt LambdaFunction.Arn
      OrganizationConfigRuleTriggerTypes:
        # Run Rule each hour
        - "ScheduledNotification"
        # Run rule on each EC2 config change
        - "ConfigurationItemChangeNotification"
      MaximumExecutionFrequency: "One_Hour"
      ResourceTypesScope:
        - "AWS::EC2::Instance"
```
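This rule has two trigger types, so the Lambda needs to tell the invocations apart. Config passes the trigger details as a JSON string in the event's `invokingEvent` field, and its `messageType` identifies the trigger. Below is a minimal dispatch sketch. `evaluate_change` and `evaluate_scheduled` are hypothetical callbacks standing in for the actual rule logic.

```python
import json
from typing import Any, Callable

Evaluator = Callable[[dict[str, Any]], list[dict[str, Any]]]

CHANGE_TYPES = {
    "ConfigurationItemChangeNotification",
    # Large items arrive as a summary only, so the full item must be fetched separately.
    "OversizedConfigurationItemChangeNotification",
}


def handle_config_event(
    event: dict[str, Any],
    evaluate_change: Evaluator,
    evaluate_scheduled: Evaluator,
) -> list[dict[str, Any]]:
    """Route one Config rule invocation to the matching evaluator."""
    invoking_event = json.loads(event["invokingEvent"])
    message_type = invoking_event["messageType"]
    if message_type in CHANGE_TYPES:
        return evaluate_change(invoking_event)
    if message_type == "ScheduledNotification":
        return evaluate_scheduled(invoking_event)
    # Other message types are not evaluated by this rule.
    return []
```

The returned evaluations are then reported to Config with `put_evaluations`, passing the invocation's `resultToken`.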

Config rules can be triggered on a schedule, when a resource changes, or both. When the rule is triggered by a configuration change, we use the code below to retrieve the most recent configuration item of the affected resource with get_resource_config_history.

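```python
import boto3

# In an organizational rule, this client must use credentials for the evaluated account.
AWS_CONFIG_CLIENT = boto3.client("config")


def get_configuration(resource_type, resource_id, configuration_capture_time):
    # Items are returned newest first, so limit=1 with laterTime set to the
    # capture time yields the configuration item recorded at that moment.
    result = AWS_CONFIG_CLIENT.get_resource_config_history(
        resourceType=resource_type,
        resourceId=resource_id,
        laterTime=configuration_capture_time,
        limit=1,
    )
    configurationItem = result["configurationItems"][0]
    # convert_api_configuration comes from the AWS sample rule templates (not shown).
    return convert_api_configuration(configurationItem)
```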
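`convert_api_configuration` is not defined in the snippet. The AWS sample rule templates provide a helper with that name. Its job is to make an item returned by `get_resource_config_history` look like the `configurationItem` found in a rule event. Below is a minimal sketch of such a helper. It assumes the API field names used here (`accountId`, `arn`, `configurationItemMD5Hash`, `version`, `relationshipName`) and that `configuration` arrives as a JSON string. It is not the AWS template code itself.

```python
import datetime
import json
from typing import Any


def convert_api_configuration(api_item: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of an API configuration item using event-style field names."""
    # Timestamps come back as datetime objects; events carry strings.
    item = {
        key: value.isoformat() if isinstance(value, datetime.datetime) else value
        for key, value in api_item.items()
    }
    item["awsAccountId"] = item.get("accountId")
    item["ARN"] = item.get("arn")
    item["configurationStateMd5Hash"] = item.get("configurationItemMD5Hash")
    item["configurationItemVersion"] = item.get("version")
    # The API serializes the resource configuration; events embed it as an object.
    if isinstance(item.get("configuration"), str):
        item["configuration"] = json.loads(item["configuration"])
    # Relationship names are called "relationshipName" in the API, "name" in events.
    item["relationships"] = [
        {**relationship, "name": relationship.get("relationshipName")}
        for relationship in item.get("relationships", [])
    ]
    return item
```

Note that `isoformat()` produces `+00:00` rather than the `Z` suffix seen in events. Rule logic that compares timestamps as strings should normalize them first.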

For scheduled evaluations, we use list_discovered_resources to get the resources of a given type in a particular account.

```python
def list_config_discovered_resources_per_resource_type(
    aws_config_client, resource_type
):
    """Get list of current resources in Config per resource type"""
    resources = []
    ldr_pagination_token = ""
    while True:
        discovered_resources_response = aws_config_client.list_discovered_resources(
            resourceType=resource_type,
            includeDeletedResources=False,
            nextToken=ldr_pagination_token,
        )
        resources.extend(discovered_resources_response["resourceIdentifiers"])
        if "nextToken" in discovered_resources_response:
            ldr_pagination_token = discovered_resources_response["nextToken"]
        else:
            break
    return resources
```
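On a scheduled run, every discovered resource gets its own evaluation, and `put_evaluations` accepts at most 100 evaluations per call. Below is a minimal sketch that builds and reports the evaluations in batches. `is_compliant` is a hypothetical per-resource check. The timestamp is assumed to come from the `notificationCreationTime` field of the scheduled invoking event. `config_client` is assumed to be a Config client for the evaluated account.

```python
import datetime
from typing import Any, Callable

MAX_EVALUATIONS_PER_CALL = 100  # PutEvaluations limit


def parse_config_time(value: str) -> datetime.datetime:
    """Parse e.g. 2022-08-11T19:37:42.753Z (fromisoformat rejects 'Z' before 3.11)."""
    return datetime.datetime.fromisoformat(value.replace("Z", "+00:00"))


def build_evaluations(
    resource_identifiers: list[dict[str, Any]],
    is_compliant: Callable[[dict[str, Any]], bool],
    ordering_timestamp: datetime.datetime,
) -> list[dict[str, Any]]:
    """Turn list_discovered_resources output into Config evaluations."""
    return [
        {
            "ComplianceResourceType": resource["resourceType"],
            "ComplianceResourceId": resource["resourceId"],
            "ComplianceType": "COMPLIANT" if is_compliant(resource) else "NON_COMPLIANT",
            "OrderingTimestamp": ordering_timestamp,
        }
        for resource in resource_identifiers
    ]


def put_evaluations_in_batches(
    config_client: Any, evaluations: list[dict[str, Any]], result_token: str
) -> list[dict[str, Any]]:
    """Report evaluations in chunks; return any evaluations Config rejected."""
    failed: list[dict[str, Any]] = []
    for start in range(0, len(evaluations), MAX_EVALUATIONS_PER_CALL):
        response = config_client.put_evaluations(
            Evaluations=evaluations[start:start + MAX_EVALUATIONS_PER_CALL],
            ResultToken=result_token,
        )
        failed.extend(response.get("FailedEvaluations", []))
    return failed
```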

Complete examples can be found in the official AWS Config documentation.

Advantages:

- With Lambda, we can monitor nearly anything we want.

- It can be triggered on a schedule (MaximumExecutionFrequency), by a configuration change, or both.

- Custom Rules also integrate with Security Hub.

Disadvantages:

- We need to deploy an IAM Role in each account (using StackSets) which can be assumed from within the Lambda.

- The Lambda code can become fairly complex, especially when we need to combine scheduled executions with executions triggered by resource configuration changes.

AWS Organizational Custom Config Rule backed by Guard

We are also exploring the brand-new AWS Config rules backed by Guard. You can now write rules using Guard, a policy-as-code language. Below is an example of a Guard rule we are testing.

```
let resource_whitelist = [
    ...
    "AWS::EC2::EIP",
    "AWS::EC2::Instance",
    "AWS::EC2::SecurityGroup",
    "AWS::S3::Bucket",
    "AWS::SNS::Topic",
    "AWS::SQS::Queue",
    ...
]

rule resource_is_tagged when
    resourceType in %resource_whitelist {
    tags["Application"] !empty or
    tags["application"] !empty or
    tags["App"] !empty or
    tags["app"] !empty
    tags["Stage"] !empty or
    tags["stage"] !empty
}
```

This rule monitors our tagging compliance. All resources in resource_whitelist must be tagged with ((A/a)pplication OR (A/a)pp) AND (S/s)tage. Resources that are not in the whitelist are ignored.
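The same check can be modeled in Python to make Guard's clause logic explicit. Clauses on separate lines are combined with AND, `or` joins alternatives within a clause, and a rule whose `when` condition is false reports SKIP. This is an illustrative model for reasoning and unit tests, not how AWS Config evaluates Guard. The whitelist contains only the entries visible in the rule above. Treating an empty string as "empty" is our assumption.

```python
from typing import Any

# Only the visible entries of resource_whitelist; the elided ones are not filled in.
RESOURCE_WHITELIST = {
    "AWS::EC2::EIP",
    "AWS::EC2::Instance",
    "AWS::EC2::SecurityGroup",
    "AWS::S3::Bucket",
    "AWS::SNS::Topic",
    "AWS::SQS::Queue",
}
APPLICATION_KEYS = ("Application", "application", "App", "app")
STAGE_KEYS = ("Stage", "stage")


def any_tag_set(tags: dict[str, str], keys: tuple[str, ...]) -> bool:
    """One 'or' chain: true if at least one key has a non-empty value."""
    return any(tags.get(key) for key in keys)


def resource_is_tagged(configuration_item: dict[str, Any]) -> str:
    """Return PASS, FAIL or SKIP for a Config configuration item."""
    if configuration_item.get("resourceType") not in RESOURCE_WHITELIST:
        return "SKIP"  # the 'when' condition is false
    tags = configuration_item.get("tags") or {}
    # The two 'or' chains are separate clauses, so both must hold.
    if any_tag_set(tags, APPLICATION_KEYS) and any_tag_set(tags, STAGE_KEYS):
        return "PASS"
    return "FAIL"
```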

We use the cfn-guard CLI to test the rule locally.

We store AWS Config events in a YAML test file. Below are two AWS Config events for an AWS::EC2::SecurityGroup. Each event is followed by the result we expect when the rule is applied to it.

```yaml
- name: TagCase1
  input: {
    "relatedEvents": [],
    "relationships": [
      {
        "resourceId": "vpc-xxx",
        "resourceName": null,
        "resourceType": "AWS::EC2::VPC",
        "name": "Is contained in Vpc"
      }
    ],
    "configuration": {
      "description": "test",
      "groupName": "test",
      "ipPermissions": [],
      "ownerId": "xxx",
      "groupId": "sg-xxx",
      "ipPermissionsEgress": [
        {
          "ipProtocol": "-1",
          "ipv6Ranges": [],
          "prefixListIds": [],
          "userIdGroupPairs": [],
          "ipv4Ranges": [
            {
              "cidrIp": "0.0.0.0/0"
            }
          ],
          "ipRanges": [
            "0.0.0.0/0"
          ]
        }
      ],
      "tags": [
        {
          "key": "app",
          "value": "something"
        },
        {
          "key": "stage",
          "value": "something"
        }
      ],
      "vpcId": "vpc-xxx"
    },
    "supplementaryConfiguration": {},
    "tags": {
      "app": "something",
      "stage": "something"
    },
    "configurationItemVersion": "1.3",
    "configurationItemCaptureTime": "2022-08-11T19:37:42.753Z",
    "configurationStateId": 111,
    "awsAccountId": "xxx",
    "configurationItemStatus": "OK",
    "resourceType": "AWS::EC2::SecurityGroup",
    "resourceId": "sg-xxx",
    "resourceName": "test",
    "ARN": "arn:aws:ec2:eu-west-1:xxx:security-group/sg-xxx",
    "awsRegion": "eu-west-1",
    "availabilityZone": "Not Applicable",
    "configurationStateMd5Hash": "",
    "resourceCreationTime": null,
    "CONFIG_RULE_PARAMETERS": {}
    }
  expectations:
    rules:
      resource_is_tagged: PASS

- name: TagCase2
  input: {
    "relatedEvents": [],
    "relationships": [
      {
        "resourceId": "vpc-xxx",
        "resourceName": null,
        "resourceType": "AWS::EC2::VPC",
        "name": "Is contained in Vpc"
      }
    ],
    "configuration": {
      "description": "test",
      "groupName": "test",
      "ipPermissions": [],
      "ownerId": "xxx",
      "groupId": "sg-xxx",
      "ipPermissionsEgress": [
        {
          "ipProtocol": "-1",
          "ipv6Ranges": [],
          "prefixListIds": [],
          "userIdGroupPairs": [],
          "ipv4Ranges": [
            {
              "cidrIp": "0.0.0.0/0"
            }
          ],
          "ipRanges": [
            "0.0.0.0/0"
          ]
        }
      ],
      "tags": [
        {
          "key": "app",
          "value": "something"
        },
        {
          "key": "stagee",
          "value": "something"
        }
      ],
      "vpcId": "vpc-xxx"
    },
    "supplementaryConfiguration": {},
    "tags": {
      "app": "something",
      "stagee": "something"
    },
    "configurationItemVersion": "1.3",
    "configurationItemCaptureTime": "2022-08-11T19:37:42.753Z",
    "configurationStateId": 111,
    "awsAccountId": "xxx",
    "configurationItemStatus": "OK",
    "resourceType": "AWS::EC2::SecurityGroup",
    "resourceId": "sg-xxx",
    "resourceName": "test",
    "ARN": "arn:aws:ec2:eu-west-1:xxx:security-group/sg-xxx",
    "awsRegion": "eu-west-1",
    "availabilityZone": "Not Applicable",
    "configurationStateMd5Hash": "",
    "resourceCreationTime": null,
    "CONFIG_RULE_PARAMETERS": {}
    }
  expectations:
    rules:
      resource_is_tagged: FAIL
```

The rule passes or fails depending on the tag keys. We expect the second case to fail because it has no stage tag, only a misspelled stagee tag.

```shell
$ cfn-guard test -r rule.guard -t tests.yaml
Test Case #1
Name: "TagCase1"
PASS Rules:
resource_is_tagged: Expected = PASS, Evaluated = PASS

Test Case #2
Name: "TagCase2"
PASS Rules:
resource_is_tagged: Expected = FAIL, Evaluated = FAIL
```
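The two test cases differ only in their tags, so a larger suite quickly becomes repetitive. One option is to generate the test file from a base event. Below is a minimal sketch. It uses a trimmed base event, because the rule only reads `resourceType` and `tags`. It writes JSON, which is also valid YAML 1.2 syntax. That cfn-guard's YAML parser accepts a JSON-formatted test file is an assumption worth checking against your cfn-guard version.

```python
import copy
import json
from typing import Any

# Trimmed version of the security group event; the rule only reads resourceType and tags.
BASE_EVENT: dict[str, Any] = {
    "resourceType": "AWS::EC2::SecurityGroup",
    "resourceId": "sg-xxx",
    "configuration": {"groupId": "sg-xxx", "vpcId": "vpc-xxx"},
}


def make_test_case(name: str, tags: dict[str, str], expected: str) -> dict[str, Any]:
    """Build one cfn-guard test case with the given tags and expected result."""
    event = copy.deepcopy(BASE_EVENT)  # never mutate the shared base event
    event["tags"] = dict(tags)
    # Config events also list the tags inside the resource configuration.
    event["configuration"]["tags"] = [{"key": k, "value": v} for k, v in tags.items()]
    return {
        "name": name,
        "input": event,
        "expectations": {"rules": {"resource_is_tagged": expected}},
    }


def render_tests(cases: list[dict[str, Any]]) -> str:
    """Serialize the test cases as JSON (a subset of YAML 1.2)."""
    return json.dumps(cases, indent=2)


cases = [
    make_test_case("TagCase1", {"app": "something", "stage": "something"}, "PASS"),
    make_test_case("TagCase2", {"app": "something", "stagee": "something"}, "FAIL"),
]
```

Writing `render_tests(cases)` to tests.yaml lets you run the same `cfn-guard test` command, and adding a case becomes a one-line change.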

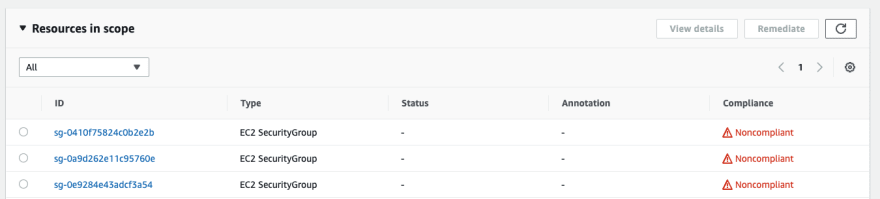

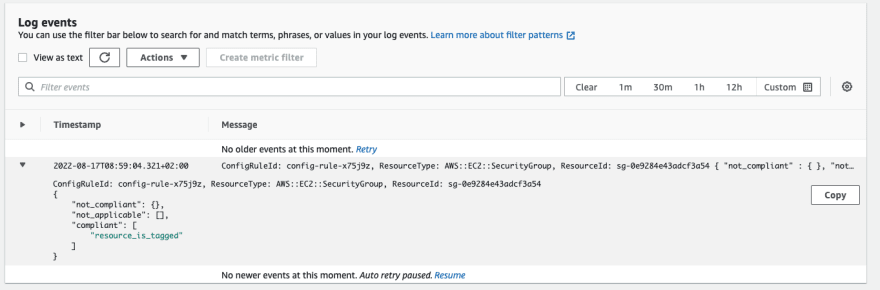

We deploy this rule in our organization. You can enable debug logging to CloudWatch Logs, which is useful for verifying the incoming events.
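Besides the console, the Config API lets you define Guard-backed organizational rules through `OrganizationCustomPolicyRuleMetadata` on `put_organization_config_rule`. At the time of writing, this SDK/CLI path did not work for us in eu-west-1. Below is a minimal sketch that only builds the request. The rule name and description are hypothetical. `DebugLogDeliveryAccounts` enables debug logs for the listed accounts.

```python
from typing import Any

# Guard runtime identifier used by Config custom policy rules.
POLICY_RUNTIME = "guard-2.x.x"


def build_guard_rule_request(
    rule_name: str, policy_text: str, debug_log_accounts: list[str]
) -> dict[str, Any]:
    """Build keyword arguments for config.put_organization_config_rule."""
    return {
        "OrganizationConfigRuleName": rule_name,
        "OrganizationCustomPolicyRuleMetadata": {
            "Description": "Check Application and Stage tags.",  # hypothetical
            # Evaluate on configuration changes.
            "OrganizationConfigRuleTriggerTypes": ["ConfigurationItemChangeNotification"],
            "PolicyRuntime": POLICY_RUNTIME,
            "PolicyText": policy_text,
            # Accounts whose evaluations write debug logs to CloudWatch Logs.
            "DebugLogDeliveryAccounts": debug_log_accounts,
        },
    }
```

In the delegated admin account, the request would be passed as `boto3.client("config").put_organization_config_rule(**request)`, with `PolicyText` read from the same rule.guard file used for the local tests.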

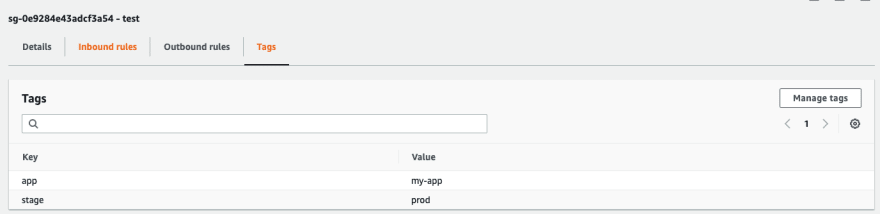

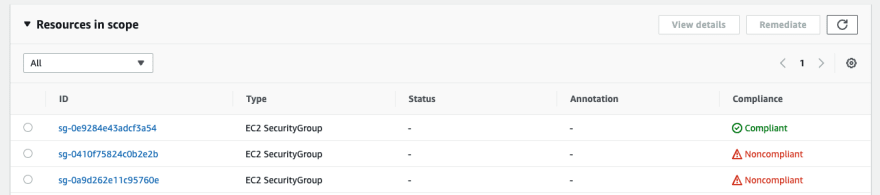

Now we add the correct tags to one of the security groups.

Advantages:

- It’s easy to learn the Guard Rules syntax.

- Guard rules are easier to maintain than a custom Lambda.

- No need to deploy a config role in each account.

- No programming knowledge required.

- Custom Rules also integrate with Security Hub.

Disadvantages:

- My rule is not triggered for SNS or SQS changes (we opened a case with AWS).

- The API endpoint to deploy an organizational Config rule backed by Guard does not work with the SDK/CLI in eu-west-1.

- There is no option to define a retention period for the CloudWatch debug log group.

- No CloudFormation support (yet).

- Cannot be triggered on a schedule.

Conclusion

Guard rules seem promising but are not yet fully production-ready for our use case. In the future, they could replace most of our custom Config rules backed by Lambda, but not all of them: some of these Lambda-backed rules need to connect to non-AWS systems. AWS managed Config rules help us release new rules faster and allowed us to remove a lot of custom code. Thanks to AWS Config, we have made great strides in our Cloud Governance journey.

All names and examples referenced in this story have been changed for demo purposes. All screenshots were taken from demo accounts.